When the Vendor Defines “Responsible AI”

Why HR’s cultural lens isn’t enough to govern AI without the technical mandate of a CDO.

When veteran human resources leaders ask questions like “Can AI Care for the Worker?” or praise AI’s potential while their organizations wrestle with reputational harm, my ears perk up. It’s not hypocrisy; it’s a signal that leaders who care deeply about people may still be making AI decisions in isolation from those who understand the technology’s long-term implications.

Everyone loves a “responsible AI” headline.

It suggests the messy work of ethics and governance has already been handled, that somewhere in the design process, morality got built into the code. But safety and responsibility aren’t permanent settings. They are governance outcomes: the product of oversight, accountability, and the authority to say no to specific behaviors and uses, not just broad principles.

The Real Decision-Makers

In many organizations, AI adoption decisions are routed to the people charged with evaluating cultural fit and employee experience. HR leaders are asked: Will people use it? Will it make work better?

What they aren’t always asked: Where will the data go? How will the model behave over time? What happens if it drifts away from our values?

That’s not a criticism of HR; it’s a reminder. Culture and data culture are still treated as separate mandates, with different leaders, budgets, teams, and skills. But when AI touches both employee well-being and enterprise data flows, these worlds have to meet. If they don’t, the vendor ends up writing the rulebook.

The Drift Moment

Drift starts the moment data governance is reduced to feature settings rather than independent oversight.

Features can be thoughtful. They can even reduce harm. But if the same company that builds the system gets to decide what “responsible” means, and no one outside the vendor has veto power, you’re not governing technology. You’re outsourcing governance to the manufacturer.

These companies all have robust data governance and cybersecurity policies. Yet in every industry, self-policing eventually bends toward self-interest.

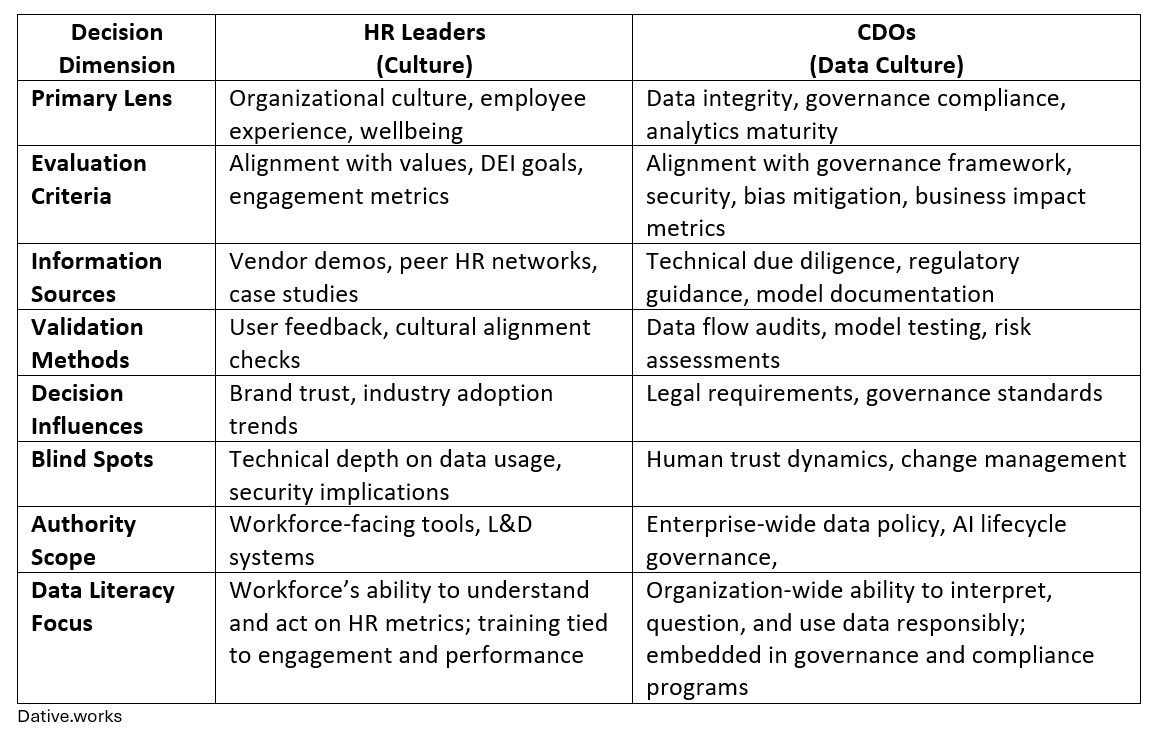

The Structural Gap: HR vs. CDO Roles

This isn’t about competence. It’s about alignment.

HR owns the cultural health of the organization.

Chief data officers (CDOs), CIOs, and CTOs own the health of the data ecosystem.

When AI affects both, decision-making must overlap. Yet even the most capable CDOs can’t make organization-wide changes without strategic partnership, shared authority, and joint accountability.

Even when the leader responsible for the data wants to bridge that gap, they’re rarely given the budget, resources, or authority to do so. Without careful strategy, intentional change management, and deep partnerships, data leaders face a high failure rate, which nudges them toward automating the “messier human elements” as much as possible.

The Governance Illusion

It’s tempting to believe that features like nudges to take breaks or prompts to reflect before making a big decision represent “responsible AI,” genuine care, or even a contribution to well-being in an organization’s culture.

They don’t.

They represent a product team’s interpretation of responsibility. That alignment might hold today. Tomorrow, it might not. Without enforceable structures outside the vendor’s control, “responsible” becomes another marketing claim.

The Mental Model

Think of responsible AI in two layers:

Feature Layer: What the tool does for users (nudges, reflective prompts, content filters).

Governance Layer: Who decides the rules, enforces them, and ensures they outlast the vendor’s current strategy.

Without the second, the first is cosmetic.

Stewardship in Practice

Good stewardship means vendors don’t get the final word on what “responsible” means. It means HR and CDOs making decisions together, backed by enforceable governance frameworks, external audits, and clear exit criteria defined before the first login.

If your AI adoption process doesn’t include both cultural and data-cultural lenses, you’re not governing. You’re hoping for the best.

Strategic Pause

This is where I ask you to slow down and sit with the discomfort.

If AI is being evaluated in your organization right now, ask: Who outside the vendor gets to say no? If you can’t answer that in one sentence, the vendor is governing the tool.

Before approving any AI system, put HR and the CDO in the same room. Make them co-sign the decision. Define the governance triggers that would shut the system down before the first employee ever uses it.

Then ask yourself: if this tool quietly rewrites the values you meant to protect, could you defend your decision in public three years from now?

Markets can recover from a bad purchase. Your credibility may not.

Leaders who don’t design for reflection inherit ritual. Agency breaks the pattern. Governance sustains progress.