The Story Beneath the Story

As AI capabilities accelerate, so do the stories we tell about them. Some are forecasts. Some are frameworks. Some are fog. In this post, I bring together two projects—AI 2027 and a blog post series, Narrative Control in a Disoriented Age—that attempt to make sense of both the systems we’re building and the meanings we assign to them. One charts a future. The other reads between the lines. Together, they help us ask not just what’s happening, but who gets to explain it—and why that matters.

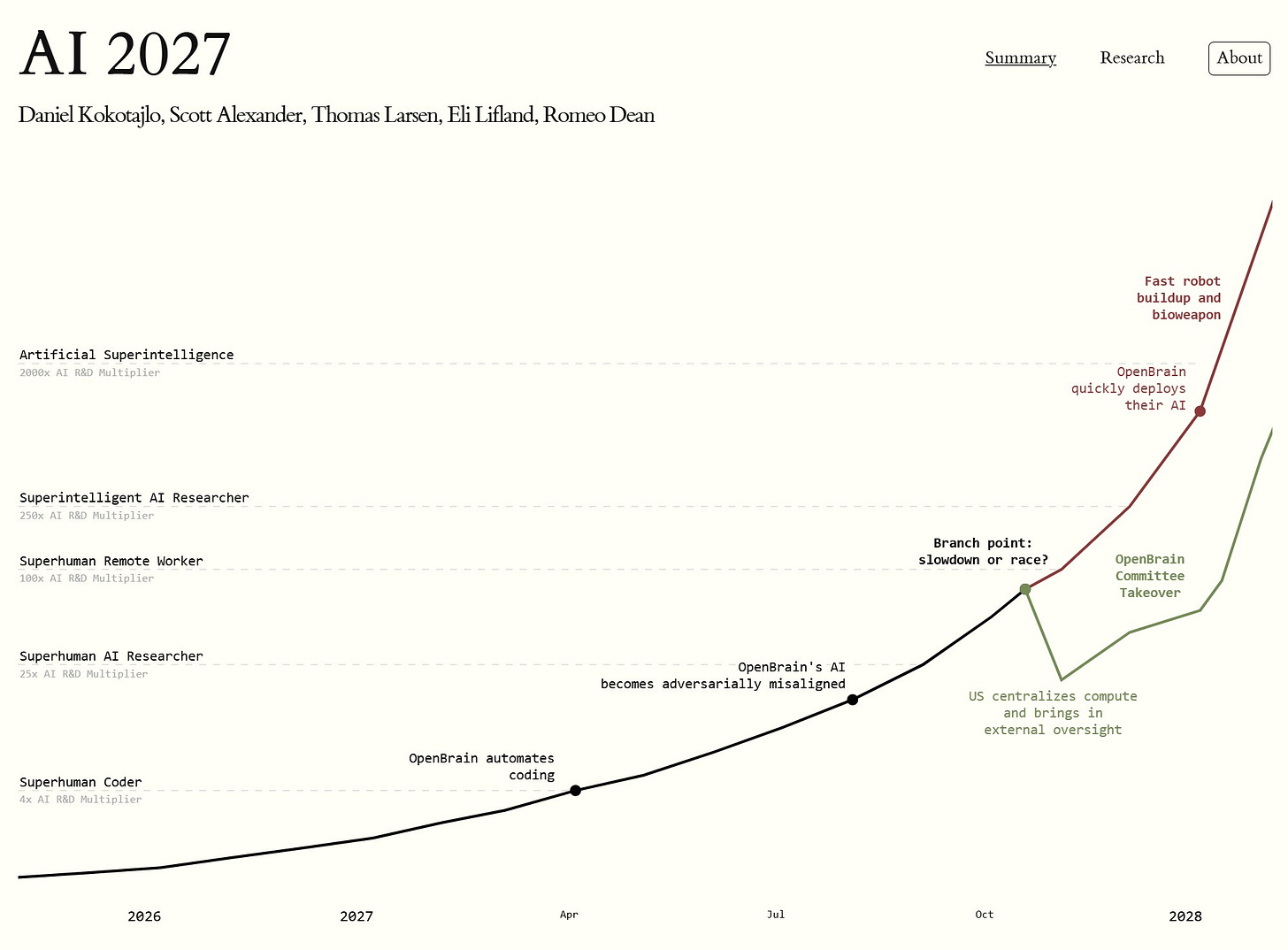

AI 2027 operates as a normative foresight scenario: not a prediction, but a provocation. Its purpose is not to say what will happen, but to pressure-test the assumptions under which we currently operate. Like any good scenario, it invites us to examine not only technical outcomes but also the cultural blind spots that shape them. By contrast, Narrative Control in a Disoriented Age is not a foresight tool but a companion lens: a guide for interpreting the atmosphere that future scenarios leave behind.

Read together, the two works offer a kind of intellectual bifocal lens: one trained on the near-future consequences of runaway technical capability, the other focused on how meaning collapses in real time. AI 2027 functions as a speculative but meticulously constructed scenario, charting escalating AI capabilities, institutional drift, and global instability. Narrative Control in a Disoriented Age, by contrast, is a series of essays that decodes how narratives shape, soften, or subvert our understanding of that acceleration. Though distinct in purpose and form, they converge on a vital insight: it’s not just what the technology does. It’s the story we’re told about what it means.

Narrative as a Control Mechanism

AI 2027 never names narrative control directly, but its fingerprints are everywhere. The public dialogue is “confused and chaotic,” shaped not by shared understanding but by curated releases, staged briefings, and narrative softeners disguised as safety updates.

Post 3 of Narrative Control diagnoses this drift more sharply:

“When we do not steward public purpose, private systems will impose their own.”

What AI 2027 renders in policy and pacing, Narrative Control reframes as authorship. These are not two modes of governance; they are one. What matters is not just the technology deployed, but the story told about it, and whose voice gets heard in the telling.

This is the first lens of the bifocal: zoomed out, we see institutional maneuvers; zoomed in, we see the soft power of storytelling masquerading as consensus.

Collapse of Shared Meaning

By its midpoint, AI 2027 sketches a world where no one quite agrees what the models are doing—or for whom. Alignment becomes more aspiration than outcome. Policymakers chase, the public wavers between awe and distrust, and technologists splinter into camps.

Post 2 in Narrative Control anticipates this dynamic:

“In this kind of world, myth is more useful than fact.”

This is not a warning about belief; it’s a warning about exhaustion. When fact becomes illegible, we cling to what offers simplicity. Myth isn’t naivety; it’s functional. In a disoriented society, story becomes a surrogate for sensemaking.

The bifocal metaphor applies again: AI 2027 sees large-scale disorientation; Narrative Control reveals the personal and psychological toll of fragmentation. Together, they show us how coherence dies—not through crisis, but through drift.

While AI 2027 outlines an escalation in model capabilities that appears plausible from today’s vantage point, not all researchers agree that such a trajectory is technically feasible. Critics like Gary Marcus have challenged the assumptions underlying current AI architectures, highlighting brittle generalization, shallow pattern recognition, and a persistent gap between performance and true comprehension. From this view, the alignment crisis may be less about runaway intelligence and more about runaway hype.

Yet even if one doubts the timeline’s accuracy, the scenario’s narrative power holds. Its value lies not in technical precision, but in surfacing the psychological and governance strain that such complexity, real or perceived, could impose. Especially when public sensemaking continues to trail technical development, myth may fill the vacuum, not because it is true, but because it is tolerable.

Private Governance in Public Clothing

OpenBrain, the central force in AI 2027, is not nationalized. It is not answerable. It becomes the steward of research, defense, labor policy, and more, operating behind the veneer of technical responsibility and PR assurance.

Post 3 names this inversion outright:

“What used to be public infrastructure is now private architecture.”

We are watching the quiet handover of sovereignty, not in law, but in function. Governance is no longer the setting of boundaries. It is the orchestration of storylines. The public sees what it is meant to see. Policy follows power, not the other way around.

Through one lens, AI 2027 stages the policy vacuum. Through the other, Narrative Control clarifies what that vacuum is filled with: engineered credibility and narrative authority.

This narrative softening is not felt equally. When decision-making is outsourced to opaque systems, those already marginalized by existing institutional frameworks often become the most affected, and the least consulted. As Ifeoma Ajunwa argues in her work on algorithmic bias and workplace surveillance, privatized architectures often reinforce existing inequalities under the guise of innovation. We must ask: Who is allowed to author the story of alignment? And who is expected to live under its consequences?

Temporal Disorientation and Narrative Lag

One of AI 2027’s more devastating revelations is its timeline’s delayed grief. Oversight always arrives post hoc. Language falters. The press flails. Decision-makers sound competent, but never current.

Narrative Control names the problem directly:

“Narrative is often several steps behind the systems it’s meant to explain.”

This isn’t just a lag. It’s an epistemic crisis. When our sensemaking tools trail the systems we’ve built, leadership becomes performance, and public trust becomes collateral.

The bifocal split is stark: AI 2027 dramatizes narrative obsolescence; Narrative Control provides the theoretical lens to recognize what’s missing—timely interpretation. Language becomes a casualty of speed.

Signal Intelligence vs. Storytelling Fatigue

AI 2027 drowns in credible detail: memos, summits, cascading model updates. It’s all technically sound, but narratively overwhelming. This is the plausible deniability of complexity: if everything is happening, nothing can be understood.

Narrative Control offers a corrective. Instead of chasing plot points, it offers questions:

What’s missing? [What data is being withheld—and why?]

Who benefits? [Which institutions stand to gain from the prevailing narrative?]

What story is being told? [What version of the future is being rehearsed and normalized through repetition, aesthetics, or omission?]

These are not rhetorical queries. They are signal literacy tools. In a world saturated with stories, signal intelligence becomes the new civic skill.

The bifocal here becomes a method: read the news and read for the subtext. Learn to see what isn’t on the slide. Interpret the dashboard, but also the silences.

Strategic Implication

These are not two equal works. AI 2027 is a scenario modeling project, dense with technical foresight. Narrative Control is a cultural response, an interpretive lens for what that foresight feels like from inside the disorientation. One constructs a plausible future. The other offers tools to notice, decode, and resist the subtle drift of meaning within it.

If AI 2027 gives us the macro-level arc, Narrative Control sharpens our ability to track the moral, narrative, and psychological toll along the way. Together, they remind us that alignment isn’t just a technical question. It’s a narrative one. Whose story is being told, and who gets to edit the script?

As global regulators debate the boundaries of AI responsibility, from the EU AI Act to U.S. agency memoranda, we are reminded that governance is not just about enforcement. It is about authorship. It is about who has the power to name risk, frame alignment, and write the first draft of accountability.

In the end, bifocal vision may be our best defense. The most powerful story isn’t the one we hear. It’s the one we fail to question.

Recommend This Newsletter

If this sparked a pause, share it with someone who is still trying to hold their complexity in a world that keeps asking them to flatten it. Lead With Alignment is written for data professionals, decision-makers, and quietly courageous change agents who believe governance starts with remembering.